Docker has revolutionized the way we build, ship, and run applications. However, deploying Docker containers in a production environment requires careful consideration and adherence to best practices to ensure security, reliability, and performance. In this blog post, we'll discuss some key best practices for running Docker containers in production.

1. Use an Official Docker Image as the Base Image

Use official and verified Docker images whenever they are available. They are maintained to follow best practices, and they keep your Dockerfiles clean.

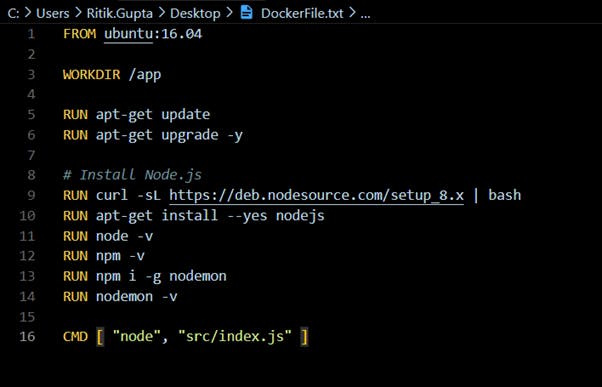

For example, if you are developing a Node.js application and want to build and run it as a Docker image, use the official Node.js image instead of taking a base operating system image and installing Node.js, npm, and whatever other tools your application needs.

Instead of using Ubuntu as the base image, use the official Node image as the base image. This keeps your Dockerfile cleaner, and the image you start from is already built with best practices.
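As a rough sketch of the difference, the Dockerfile below starts from the official Node.js image and leaves the hand-rolled Ubuntu alternative in comments for contrast. The image tag, the /app directory, and the server.js entry point are assumptions chosen for illustration, not details of a real project.

```dockerfile
# Hand-rolled alternative, shown only for contrast (commented out):
# FROM ubuntu:22.04
# RUN apt-get update && apt-get install -y nodejs npm

# Official image: Node.js and npm are already installed and configured.
FROM node:20.11.1

WORKDIR /app

# Assumes package.json sits next to this Dockerfile.
COPY package*.json ./
RUN npm install

COPY . .

# server.js is a hypothetical entry point; use your application's own.
CMD ["node", "server.js"]
```

With the official image, the Dockerfile only describes the application itself, not how to install the runtime.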

2. Use Specific Docker Image Versions

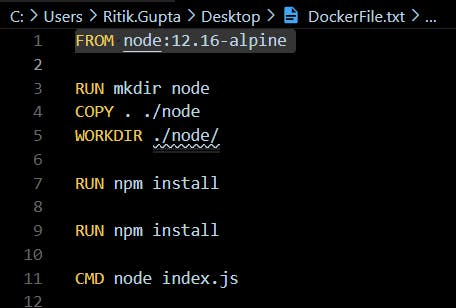

When deploying Docker containers in a production environment, it's important to use specific image versions rather than relying on the latest tag. This lowers the possibility of unexpected behavior resulting from changes in newer versions by ensuring that your application uses a known and tested version of the image.

The latest tag is unpredictable: a new build might pull a different version than the previous build did, and that newer version might break your application or behave unexpectedly.

To pin a specific image version, use the image tag corresponding to the version you want. For example, instead of FROM node or FROM node:latest, use a pinned tag such as FROM node:19.1.2 so that your container always runs that exact version of the node image.

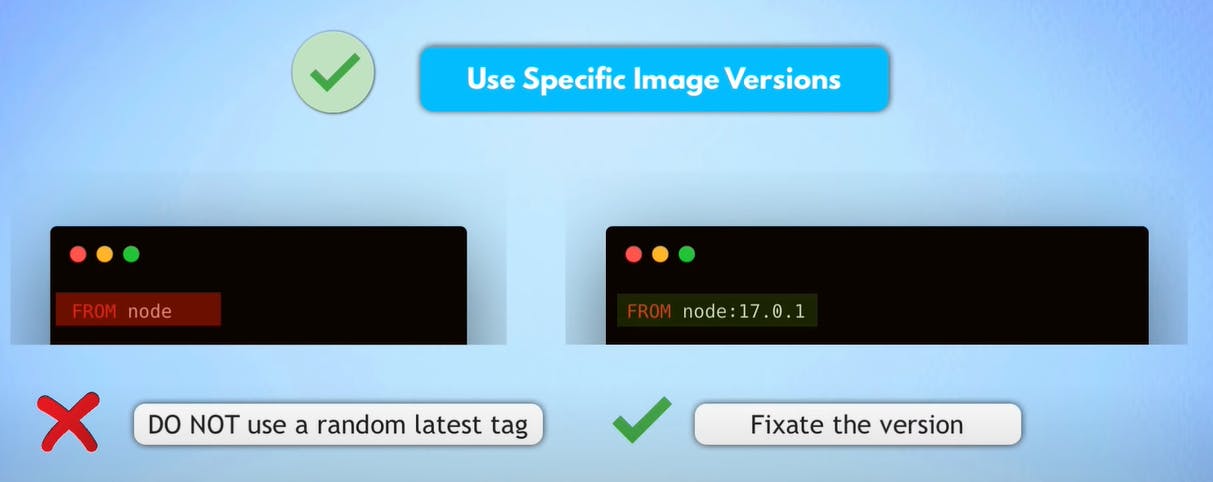

3. Keep Images Small

Minimize the size of your Docker images by using a multi-stage build process and removing unnecessary dependencies and files. Smaller images reduce deployment times and minimize the attack surface.

When selecting a base image for your Docker containers, consider using Alpine Linux for smaller image sizes and improved security. Alpine Linux is a lightweight Linux distribution designed for security, simplicity, and efficiency, and images based on it are much smaller than full-blown OS images like Ubuntu or CentOS.

A full-blown operating system distribution ships with system utilities and OS features that our image might never use. That means a larger image and, from the very beginning, more packages that can carry security vulnerabilities.

Smaller images take up less space in the image repository and on the deployment server, and they transfer faster when pushed to or pulled from the repository.
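A minimal sketch of what this can look like for a Node.js application is shown below. The Alpine tag, the server.js entry point, and the presence of a package-lock.json (which npm ci requires) are assumptions for illustration.

```dockerfile
# Alpine-based variant of the official Node.js image (illustrative tag).
FROM node:20.11.1-alpine

WORKDIR /app

COPY package*.json ./
# Install only production dependencies to keep the image small.
# npm ci needs a package-lock.json in the build context.
RUN npm ci --omit=dev

COPY . .

# server.js is a hypothetical entry point.
CMD ["node", "server.js"]
```

Alpine uses musl instead of glibc, so if your application depends on native modules, test it on the Alpine image before switching.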

4. Optimize Caching of Image Layers

Optimizing the caching of image layers can significantly improve Docker build times, especially for large projects with complex dependencies.

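Docker reuses a cached layer only if that instruction and every instruction before it are unchanged; once one layer changes, all the layers after it are rebuilt. So order Dockerfile commands from least to most frequently changing to take full advantage of caching.

Minimize Layer Size: Keep each layer as small as possible by combining commands, chaining them with &&, and removing unnecessary files or dependencies in the same RUN instruction that created them, since files deleted in a later layer still take up space in the earlier one.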
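The ordering and chaining advice can be sketched as follows. The base image tag, the curl package (a placeholder for whatever OS package you need), and server.js are assumptions for illustration.

```dockerfile
FROM node:20.11.1

WORKDIR /app

# Changes rarely: OS packages. A single RUN installs and cleans up,
# so the apt package lists never end up in a layer.
RUN apt-get update \
    && apt-get install -y --no-install-recommends curl \
    && rm -rf /var/lib/apt/lists/*

# Changes occasionally: dependency manifests. This layer and the
# install below come from cache until package*.json changes.
COPY package*.json ./
RUN npm ci

# Changes most often: application source, so it is copied last.
COPY . .

# server.js is a hypothetical entry point.
CMD ["node", "server.js"]
```

With this order, editing a source file invalidates only the final COPY layer, and the dependency install is not repeated.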

5. Use a .dockerignore File

We don't need everything in our repository to end up inside the image.

Create a .dockerignore file in your project directory to specify which files and directories to exclude from the Docker build context. This reduces the size of the build context, which in turn improves build performance and makes it less likely that unnecessary files end up in the image.

For example, we can exclude files like local development configurations, build artifacts, and temporary files by adding them to our .dockerignore file.
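A minimal sketch of such a file for a Node.js project might look like this. Every entry is an assumption about a typical project layout, so adjust the list to what your repository actually contains.

```text
# Dependencies are installed inside the image
node_modules
npm-debug.log

# Version control and editor settings
.git
.gitignore
.vscode

# Local configuration and secrets
.env

# Build output and temporary files
build
dist
*.tmp

# The Docker files themselves are not needed inside the image
Dockerfile
.dockerignore
```

Each line is a path or pattern relative to the root of the build context, and lines starting with # are comments.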

6. Make Use of Multi-Stage Builds

Utilize multi-stage builds to separate build dependencies from runtime dependencies. This approach helps reduce the size of the final image and improves caching by ensuring that only necessary artifacts are included in the final image.

In a multi-stage build, you can use one stage to build your application and another stage to package the application into a runtime image. This allows you to discard the build dependencies and intermediate artifacts, resulting in a smaller and more efficient final image.

Here's an example of a multi-stage Dockerfile for a Node.js application:

# Stage 1: Build the application

FROM node:14 AS build

WORKDIR /app

COPY package*.json ./

RUN npm install

COPY . .

RUN npm run build

# Stage 2: Create the final image

FROM nginx:alpine

COPY --from=build /app/build /usr/share/nginx/html

EXPOSE 80

CMD ["nginx", "-g", "daemon off;"]

The first stage (build) installs dependencies, builds the application, and generates the production build. The second stage uses a smaller base image (nginx:alpine) and copies the build output from the first stage, resulting in a final image that only contains the production-ready application files.

7. Use the Least Privileged User

When running containers in production, avoid running processes as the root user to minimize the impact of potential security vulnerabilities. By default, when a Dockerfile doesn't specify the user, Docker uses the root user, which introduces a security issue.

Instead, create a new user within the Docker image with the minimum permissions needed to run the application. The steps are: create a dedicated user and group, grant that user only the permissions the application requires, and switch to the non-root user with the USER directive.

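# Create a dedicated group and user (Debian-based images; on Alpine use addgroup -S and adduser -S -G instead)

RUN groupadd -r myuser && useradd -r -g myuser myuser

# Give the new user ownership of the application directory

RUN chown -R myuser:myuser /app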

# Set the working directory and switch to the new user

WORKDIR /app

USER myuser

# Run the application

CMD ["npm", "start"]

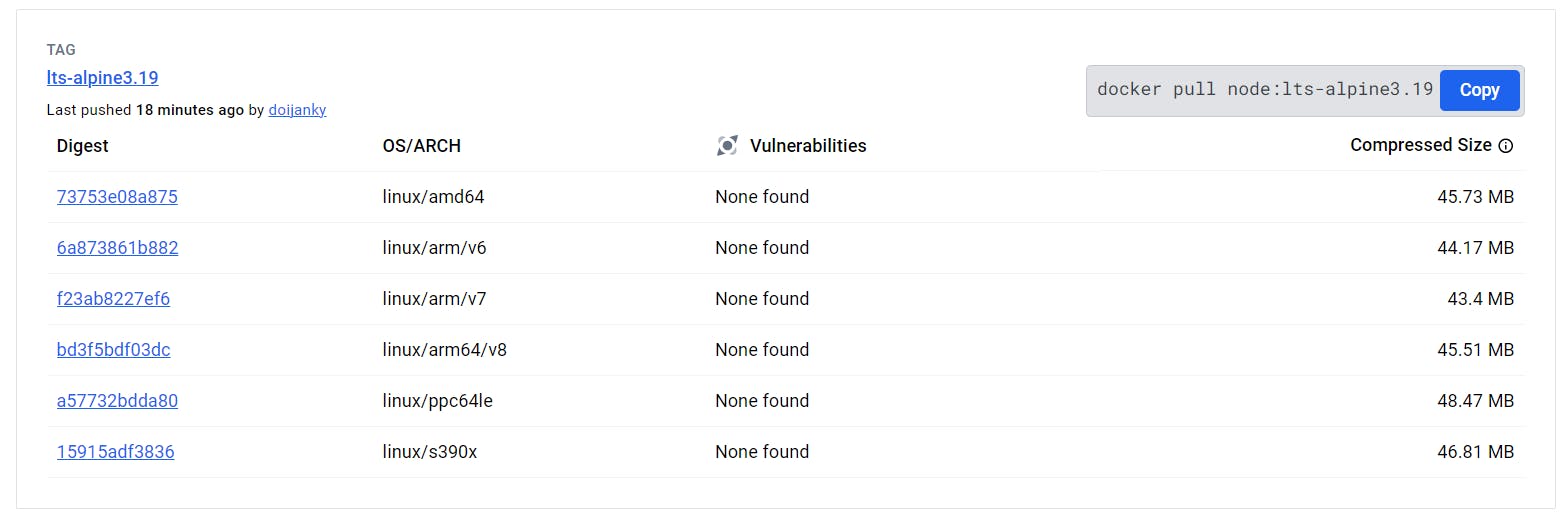
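Some official images already bundle a non-root user, so you may not need to create one yourself. The official Node.js images, for instance, ship with a user named node. The sketch below relies on that user; the image tag, the server.js entry point, and the presence of a package-lock.json are assumptions for illustration.

```dockerfile
FROM node:20.11.1-alpine

WORKDIR /app

# Copy files so that they are owned by the built-in non-root user.
COPY --chown=node:node package*.json ./
RUN npm ci --omit=dev
COPY --chown=node:node . .

# Drop root privileges before the application starts.
USER node

# server.js is a hypothetical entry point.
CMD ["node", "server.js"]
```

Everything after the USER instruction, including the running container process, executes as node rather than root.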

8. Scan the Image for Security Vulnerabilities

Use tools like Clair, Docker Security Scanning, or third-party vulnerability scanners to scan your Docker images for known security vulnerabilities. These tools can identify and report vulnerabilities in your images, allowing you to take proactive measures to mitigate risks.

Integrating vulnerability scanning into your CI/CD pipeline can help ensure that only images free from known vulnerabilities are deployed to production. Additionally, regularly updating your base images and dependencies can help mitigate the risk of newly discovered vulnerabilities.

Here's an example of how you can use Docker security scanning to scan an image for vulnerabilities:

docker scan <image_name>

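On recent Docker releases, where docker scan has been retired in favor of Docker Scout, the equivalent command is docker scout cves <image_name>. Several third-party tools are also available for scanning Docker images for security vulnerabilities, such as Aqua Security, Clair, and Trivy.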

Last but not least, for large-scale production deployments, consider using Docker Swarm or Kubernetes for container orchestration. These tools provide advanced features such as automated scaling, service discovery, and rolling updates.

Thanks for reading! I hope you understood these concepts and learned something.

If you have any queries, feel free to reach out to me on LinkedIn.